The layman's guide to Artificial Intelligence

All there is to know about the not-so-quiet revolution happening in tech

The mind has fascinated humans for a long time. Science has long been employed to explain it, and today has a dedicated branch for the purpose known as cognitive neuroscience. With the advent of modern computing, a different but related experiment has arisen –– replicating the mind. This is the discipline known today as artificial intelligence, or AI for short.

AI is a field broadly concerned with simulating human intelligence in machines. While dating back several decades, it has been propelled to the fore by twenty-first century advances in technology. Considerable progress has been made, with machines today being able to “see”, process natural language, make predictions, take decisions, create content, and move around in the physical environment. These abilities, however, currently vary in their depth and breadth when compared to humans –– some are sharper, others not. And current AI systems are generally able to manifest only one of them at a time –– a limitation known as narrow AI. This contrasts with general AI systems, which would be able to perform any intellectual task that a human can –– a highly sought-after achievement by some industry practitioners.

Machine learning demystified

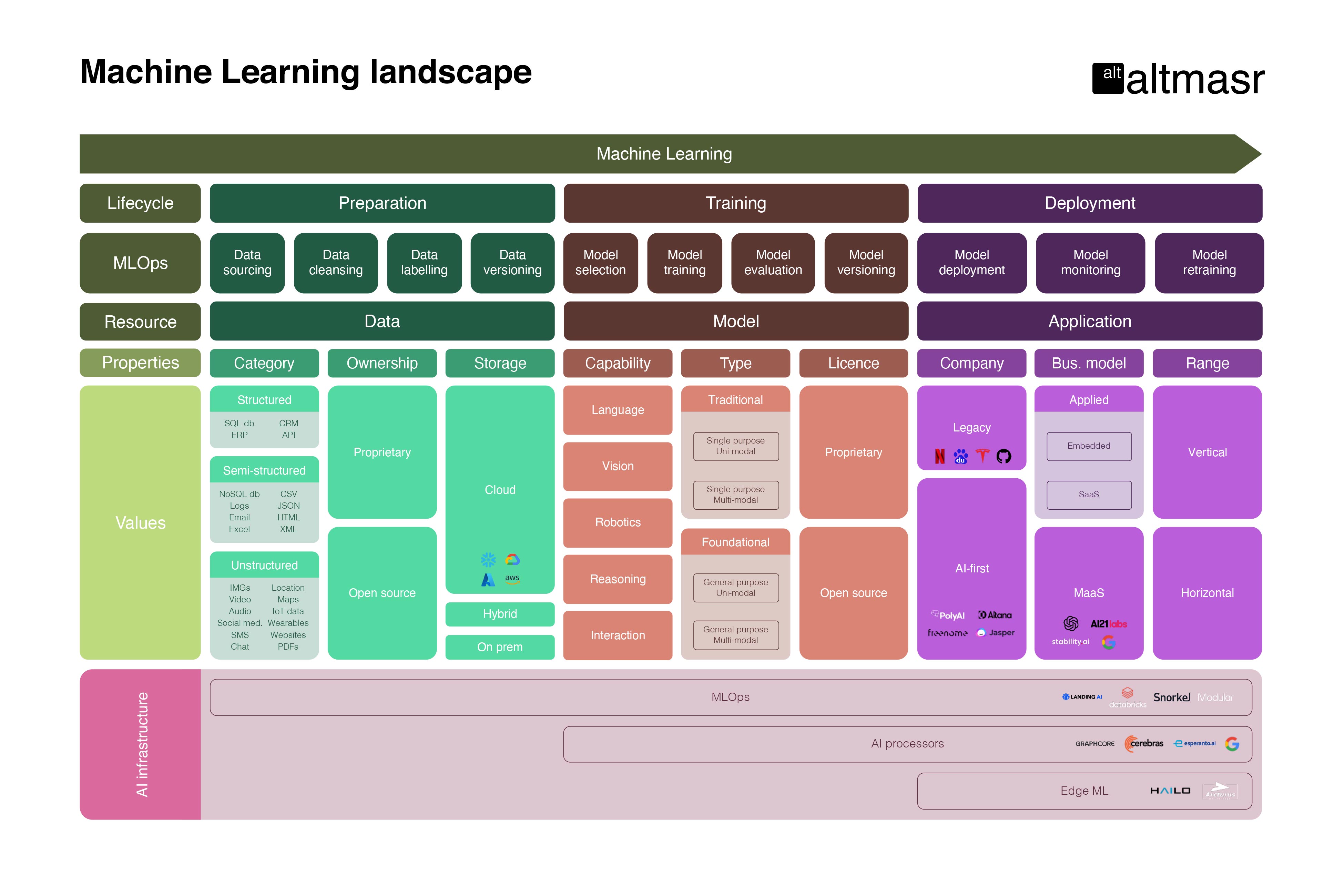

The remarkable thing is that most machines today are not explicitly programmed with instructions on how to perform such complex tasks, which was the challenging modus operandi in earlier times. Instead, machines “learn” how a task is performed by recognizing patterns in data –– a technique called machine learning (ML). This technique has been game changing –– so much so, in fact, that some leading practitioners have hailed it as a paradigm shift in how software is engineered.

Machine learning systems are driven mainly by two elements: data and a model. In plain language, data is fed to a model as “examples”, from which the model gains “experience”, and is then able to make “guesses” from what it has learnt. Imbuing a model with experience is a stage of the process called training, while eliciting guesses from a model is one called inference. These “educated guesses” are what allow AI systems today to manifest sophisticated abilities.
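To make the two stages concrete, here is a minimal sketch in Python. It assumes the simplest possible model (a straight line fitted by ordinary least squares) and uses made-up figures; the names `train` and `infer` are illustrative and not taken from any library.

```python
# Illustrative only: a one-feature linear model fitted by ordinary least squares.
# The data is invented for the example.

def train(examples):
    """Training: learn a slope and an intercept from (input, output) examples."""
    n = len(examples)
    mean_x = sum(x for x, _ in examples) / n
    mean_y = sum(y for _, y in examples) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in examples)
    variance = sum((x - mean_x) ** 2 for x, _ in examples)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def infer(model, x):
    """Inference: use the learnt parameters to make a guess for a new input."""
    slope, intercept = model
    return slope * x + intercept


# "Examples": flat size in square metres -> monthly rent (made-up numbers).
examples = [(30, 710), (45, 990), (60, 1310), (80, 1690)]

model = train(examples)        # the model gains "experience"
guess = infer(model, 70)       # an "educated guess" for an unseen flat size
print(f"Estimated rent for 70 square metres: {guess:.0f}")
```

The model never sees a rule such as "rent rises with size"; it recovers that pattern from the examples alone, which is the point of the technique.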

Architecting an ML system involves, amongst other things, defining what task needs to be engineered, determining what data is required to engineer it, and selecting a suitable model to train based on the nature of the task and data.

Deep learning –– machine learning on steroids

Today, there is much more data that machines can learn from than ever before. Increased digitisation, broader internet penetration, as well as the availability of new platforms, apps, and devices have all contributed to this explosion. Where data is scarce, it is also generally easy to create/capture using the ample technologies that exist. Data pipelines have become easier to create, manage, and scale due to the proliferation of cloud computing options. This has allowed ML to flourish and its use cases to grow exponentially.

There have also been significant advances on the model front over the past two decades. ML models are typically computationally expensive to train –– they are based on optimisation algorithms that require considerable computing resources to run on a dataset. Twenty-first century advances in computing power and access to such distributed resources on the cloud have allowed for the training of larger models on larger datasets. A particular class of model that scales very usefully this way is neural networks, whose architecture is inspired by the structure and function of the human brain.[1] Training large neural networks on big datasets is a type of ML called deep learning.[2] Deep learning models have proven to be powerful –– they learn with less need for human engineering and generally outperform traditional models.
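For a feel of what an optimisation algorithm does during training, the sketch below uses gradient descent, one common choice (the article does not single out a particular algorithm). It assumes a toy model with just two parameters and invented data; real neural networks apply the same idea to millions or billions of parameters, which is where the computing cost comes from.

```python
# Illustrative only: gradient descent on a two-parameter model, y = weight * x + bias.

def mean_squared_error(weight, bias, data):
    """Average squared gap between the model's guesses and the desired outputs."""
    return sum((weight * x + bias - y) ** 2 for x, y in data) / len(data)


def gradient_step(weight, bias, data, learning_rate):
    """Nudge both parameters a small step in the direction that reduces the error."""
    n = len(data)
    grad_weight = sum(2 * (weight * x + bias - y) * x for x, y in data) / n
    grad_bias = sum(2 * (weight * x + bias - y) for x, y in data) / n
    return weight - learning_rate * grad_weight, bias - learning_rate * grad_bias


# Invented data that follows y = 2x + 1 exactly.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

weight, bias = 0.0, 0.0                      # start from an uninformed setting
initial_loss = mean_squared_error(weight, bias, data)

for _ in range(2000):                        # each pass refines the parameters a little
    weight, bias = gradient_step(weight, bias, data, learning_rate=0.05)

final_loss = mean_squared_error(weight, bias, data)
print(f"weight={weight:.3f}, bias={bias:.3f}")
print(f"error before training: {initial_loss:.3f}, after: {final_loss:.6f}")
```

Every step touches every data point and every parameter, so the work grows with both the size of the dataset and the size of the model.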

Foundation models –– an emerging paradigm

There has lately been an outpouring of research into deep learning within both academia and big tech, which has produced new model variants that learn in more meaningful ways –– seemingly providing models with a deeper understanding of the world. Instead of the traditional single-task approach, these models can be pre-trained on large corpora of data and then adapted to perform a wide range of downstream tasks. They can also learn from multimodal data (e.g. text, images, speech) and then be adapted to tasks spanning several modes (e.g. vision and language). Adaptation typically requires additional training on domain-specific data, but a remarkable feature of some of these models is that they can also be adapted via in-context learning –– a process akin to learning on-the-fly by receiving a brief explanation of a task in natural language. Such models are termed foundation models, as a reflection of their platform-like nature and status as an emerging ML paradigm.
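In practice, in-context learning often amounts to writing the task description and a few worked examples into the text handed to the model. The sketch below only assembles such a prompt and calls no model; the function name and the "Input:/Output:" layout are assumptions made for illustration, not a format required by any particular provider.

```python
# Illustrative only: assembling a few-shot prompt for in-context learning.
# No model is called here; the layout is a hypothetical convention.

def build_few_shot_prompt(task_description, worked_examples, new_input):
    """Combine a task description, worked examples, and a new input into one prompt."""
    lines = [task_description, ""]
    for source, target in worked_examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {new_input}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("Loved every minute of it.", "positive"),
        ("A waste of an evening.", "negative"),
    ],
    "The plot dragged, but the acting was superb.",
)
print(prompt)
```

No parameters change in this process: the same pre-trained model is steered towards a new task purely by what it is shown at inference time.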

Particularly popular applications of foundation models at the moment are conversational AI and AI art –– both forms of generative AI based on language and text-to-image models respectively.

While ML breakthroughs are still mostly confined to the research domain and to big tech companies (which are wealthy in data, talent, and financial resources), foundation models, tooling, and a growing open-source community are contributing to the democratisation of ML and are expected to accelerate its diffusion from research to industry in the coming years.

Artificial intelligence in practice

AI has the potential both to create considerable economic value and to increase its propensity to change hands. This has not gone unappreciated at the geopolitical level, with what seems to be an emerging AI race between global powers. The impact will also be felt by businesses across all industries and geographies: existing businesses are adapting to a new playing field (e.g. cloud migration, being data driven, automation), and new ones have been emerging because of it, especially in recent months and years.

The industry’s increasing prominence has resulted in the term “AI” becoming a buzzword that is often used liberally, which can be confusing for many people. To better understand AI companies, it can be useful to classify them into one of three categories: Applied AI, Model-as-a-Service, and AI Infrastructure. Some companies may span several categories.

Applied AI

Applied AI companies are those that leverage AI capabilities to drive their business. These can be legacy companies like Netflix, Baidu, Tesla, or GitHub that have re-engineered existing offerings to use AI, or “AI-first” companies like PolyAI, Altana, Freenome, or Jasper that have built intelligent products from day one. While legacy companies that apply AI have mostly been internet companies, they can also be traditional businesses –– e.g. a hospital that uses an ML model to diagnose conditions from medical imaging, or a design office that uses a text-to-3D-object model to render mock-ups.

Applied AI companies can also be classified as vertical or horizontal, meaning they are specific to a particular industry or applicable across industries. For example, Freenome has developed an intelligent cancer detection product (i.e. healthcare), while PolyAI's voice assistants have applications in a variety of industries (e.g. retail, hospitality, banking).

Applied AI companies maintain their competitive advantage through various strategies, such as proprietary data, proprietary models, and vendor lock-in. Newer companies can initially make use of open-source data, open-source models, and foundation models, then differentiate themselves as they build momentum.

Model-as-a-Service

Not dissimilar to Platform-as-a-Service, the past years have seen the emergence of Model-as-a-Service (MaaS) companies. They leverage in-house ML engineering teams and access to significant computing resources to develop proprietary foundation models. Such models are pre-trained on vast amounts of data from various sources, including the internet, and their usage is generally paywalled behind an API or some other interface, with inference taking place on company servers, which is typically expensive to run. They can be used as is or adapted for specific use cases –– including by applied AI companies.

While MaaS is a nascent business model, such companies are expected to multiply in the coming years as foundation models become more mainstream and AI adoption ramps up in industry. Examples of MaaS companies include OpenAI, which develops the GPT family of language models (including ChatGPT and GPT-3), the text-to-image model DALL-E 2, and the text-to-code model Codex; Stability AI, which develops the Stable Diffusion family of text-to-image models; Midjourney, which develops eponymous text-to-image models; Google, which hosts the text-to-speech model WaveNet[3]; and AI21 Labs, which develops the language model Jurassic-1.

AI Infrastructure

AI infrastructure is a “backend” industry and encompasses a range of companies whose products support the AI process.

These can be MLOps[4] products, which are a suite of software products that help humans develop and operationalise ML systems. These products can provide workflow management, cloud resources, tooling, and versioning across the entire ML lifecycle, and are considered key catalysts to the adoption of AI in industry. There are many flavours of MLOps out there, including those that specialise in a particular stage of the lifecycle (e.g. data annotation, model fine-tuning), end-to-end solutions, tools for low-code users or experienced engineers, and tools that are specific to a single domain (e.g. vision, language) or applicable to a wide range of them. Cloud providers like Snowflake, Google, Microsoft, and AWS have moved into this space, leveraging their position as entrenched data platforms. “AI-first” companies also compete, including Landing AI, Databricks, Snorkel AI, and Modular.

AI infrastructure companies also develop specialised hardware, such as AI microchips. These are designed to accelerate ML applications, as traditional general-purpose processors are inefficient at running heavy ML workloads. Companies that design these microchips often adopt a cloud computing business model, and include Graphcore, Cerebras, Esperanto AI, and Google.

AI microcontrollers are another type of specialised hardware that allows edge devices to handle deep learning inference workloads without needing to send data to a remote server, improving the speed and efficiency of the process. Applications that benefit from this include those that require low-latency inference at the edge, such as autonomous driving and surveillance. Examples of companies that produce these microcontrollers are Hailo AI and Arcturus.

Inferring the future

Certain trends in AI are worth noting when looking ahead. These include the increasing demand for computing power, the growing role of edge AI, the homogenisation of models, the potency of intelligence research, the rising importance of ethics and regulation in the field, the need for workforce reskilling, and the dangers of data concentration.

The jury is still out on whether AI will be able to match the human mind in its singular wonder. Regardless of the outcome –– brace for change.

[1] Also known as artificial neural networks to distinguish them from their biological counterparts. More online here.

[2] It is useful to think of neural networks as black boxes that take input, produce output, and have a defined number of control knobs. During training, the knobs are adjusted until the overall configuration produces the desired outputs from the given inputs. A well-configured black box is then able to produce useful outputs from fresh inputs. Under the hood, this configuration process is carried out by an optimisation algorithm that makes use of mathematical and statistical techniques. In ML-speak, control knobs are known as model parameters, their settings as parameter values, inputs as data, and outputs as predictions. The size of a neural network is defined by how many parameters it has.

[4] “DevOps” for machine learning.
